-

OSGeo Student Awards

Written by: Charlie Schweik · Posted on: October 06, 2017

OSGeo Student Awards for FOSS4G 2017, Boston

On behalf of GeoForAll, we are pleased to announce the winners of the OSGeo Student Awards that were given out at FOSS4G 2017, Boston. This year, the international GeoForAll Student Award Review Committee decided to give the awards to students who were sole or first authors on submitted papers that made it through a rigorous peer-review process and were accepted for publication in the FOSS4G 2017 Academic Proceedings [1].

This year’s winners are:

1st place: Hyung-Gyu Ryoo and Soojin Kim, Department of Computer Science and Engineering, Pusan National University, South Korea, for:

- Ryoo, Hyung-Gyu; Kim, Soojin; Kim, Joon-Seok; and Li, Ki-Joune (2017) “Development of an extension of GeoServer for handling 3D spatial data,” Free and Open Source Software for Geospatial (FOSS4G) Conference Proceedings: Vol. 17, Article 6.

- Available at: http://scholarworks.umass.edu/foss4g/vol17/iss1/6

2nd place: Mohammed Zia, Geomatics Engineering Department, Istanbul Technical University, Turkey, for:

- Zia, Mohammed; Cakir, Ziyadin; and Seker, Dursun Zafer (2017) “A New Spatial Approach for Efficient Transformation of Equality - Generalized Traveling Salesman Problem (GTSP) to TSP,” Free and Open Source Software for Geospatial (FOSS4G) Conference Proceedings: Vol. 17, Article 5.

- Available at: http://scholarworks.umass.edu/foss4g/vol17/iss1/5

3rd place: Lorenzo Booth, School of Engineering, University of California, Merced, CA, USA, for:

- Booth, Lorenzo; and Viers, Joshua H. “Implementation of a large-scale, interactive agricultural water balance model using R and GDAL.”

- (Note: At the authors’ request, this was not included in the final conference proceedings.)

In addition to the distinction of these commendations, the students received cash awards of $500, $300, and $200, respectively. We congratulate these scholars for their contributions.

The student awards are possible thanks to the support of the Open Source Geospatial Foundation and the efforts of the Student Award Review Committee and the broader FOSS4G 2017 Academic Program Committee [2].

— Charlie Schweik, FOSS4G 2017 Academic Committee co-chair

[1] http://scholarworks.umass.edu/foss4g/vol17

[2] https://wiki.osgeo.org/wiki/FOSS4G_2017#Academic_Committee

-

FOSS4G Abstract Selection Process

Written by: Andy Anderson · Posted on: June 05, 2017

FOSS4G Presentations: Multiple Rounds of Acceptance

Basic Considerations

There are many considerations that went into the Program Committee’s decisions about which abstracts to accept for this year’s conference, as described previously by FOSS4G ’17 Conference Chair Michael Terner. The most basic one, of course, is that we could only select roughly 250 oral presentations and 50 posters out of the 424 abstracts submitted, due to space and time constraints. But beyond that we wanted a quality program that was comprehensive with respect to the many applications of FOSS4G, and that reflected the diversity of people in our Community: from users to developers, from the renowned to the lesser-known, from business to government to the academy, and from North America to the rest of the world.

The selection process for oral presentations therefore proceeded in multiple rounds, producing 251 acceptances over a five-week period from March 22 to April 28:

- The Program Committee selected its top 13 abstracts for early acceptance;

- The Community voted through an open process on its favorites, resulting in another 47 acceptances;

- The Academic Committee reviewed the 49 abstracts submitted for consideration as academic papers and selected another 30;

- A more methodical ranking of abstracts by the Program Committee generated a middle set of 90 acceptances;

- The Program Committee accepted another 46 that they considered of particular interest and with the additional goal of diversifying content;

- And finally, the Program Committee accepted another 25 abstracts with the main goal of diversifying speakers.

Another 58 abstracts were accepted for posters, 41 are on a waitlist, and 74 were rejected or withdrawn.
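As a quick check on the arithmetic, the counts quoted above can be tallied in a few lines of Python. This is only an illustrative sketch: the variable names are made up for the example, and the numbers are those given in this post.

```python
# Acceptances per round for oral presentations, as listed above.
oral_rounds = {
    "early acceptance": 13,
    "community voting": 47,
    "academic papers": 30,
    "methodical middle": 90,
    "diversifying content": 46,
    "diversifying speakers": 25,
}
oral_total = sum(oral_rounds.values())

# Disposition of every submitted abstract at the time of writing.
outcomes = {
    "oral": oral_total,
    "poster": 58,
    "waitlist": 41,
    "rejected or withdrawn": 74,
}
submitted = sum(outcomes.values())

# Share of all submissions in each category, rounded to a whole percent.
shares = {name: round(100 * count / submitted) for name, count in outcomes.items()}

print(oral_total, submitted)
print(shares)
```

The six rounds sum to the 251 oral acceptances, and the four outcome categories sum to the 424 abstracts submitted.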

What follows is a somewhat idealized description of the process followed by the Program Committee.

Oral Presentations

1. Early Acceptance

As the Committee reviewed the abstracts, everyone noted the presentations that they found to be especially interesting. With members from academia, government, and business, including both developers and end-users, we certainly had a diversity of opinions! Nevertheless, when these top picks were collected there were 13 that showed up three or more times, and they received our first notifications of acceptance. Early acceptance was important so that we could begin showing program content early to prospective attendees while the rest of the deliberations proceeded.

2. Community Voting

Many of the readers of this post probably took part in Community Voting, where anyone could look through the abstracts, anonymously presented, and choose the ones of most interest to them, scoring them as either 1 (interested) or 2 (very interested). The Program Committee looked at the total scores or ranking and accepted the top 47 that weren’t previously accepted early.

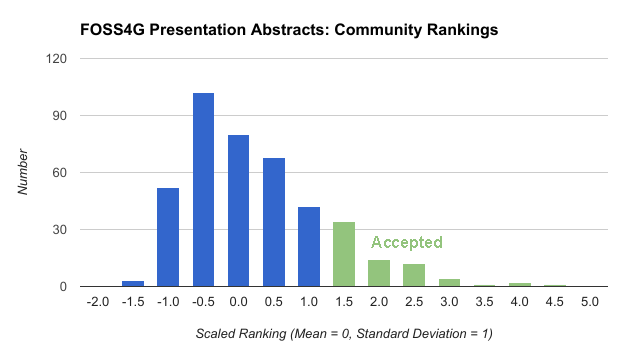

An interesting way of looking at the presentations is to scale their total Community ranking to a Z score = (ranking – mean)/stdDev, which has a mean of zero and a standard deviation of one, and plot a histogram:

The accepted presentations had a Z score of 1.08 or higher, in other words they were more than one standard deviation above the mean. The skew to the left indicates a diversity of interest in many talks that received relatively low scores.
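The scaling described above can be sketched in a few lines of Python. The scores below are invented for illustration, and the use of the population standard deviation is an assumption, since the post does not say which form was used; only the 1.08 cutoff comes from the text.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Scale values to zero mean and unit standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    return [(v - mu) / sigma for v in values]


# Hypothetical total Community scores for six abstracts (not real data).
totals = [12, 15, 9, 30, 18, 6]
z = z_scores(totals)

# Keep the abstracts at or above the cutoff quoted in the post.
CUTOFF = 1.08
accepted = [index for index, score in enumerate(z) if score >= CUTOFF]

print([round(score, 2) for score in z])
print(accepted)
```

With these made-up totals, only the abstract at index 3 clears the cutoff.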

3. Academic Papers

The Academic Committee had their own selection process that had the additional consideration of whether the abstract would result in a good research paper. As described in a previous blog post, they accepted 30 presentations from the 49 academic abstracts submitted. Because this process occurred in parallel with the Program Committee and Community Voting, which reviewed all submissions, a number of the accepted academic presentations had the option of giving a regular oral presentation instead, and two submitters chose to take that route.

4. Methodical Middle

The Program Committee developed a review and scoring system that considered the following characteristics of the abstracts:

Relevance — Does it apply free and open-source tools? Is it geographic in nature?

Credibility — Does the abstract make sense? Is it based on accepted tools and procedures?

Importance — Does it contribute to practice, research, theory, or knowledge? Is it of interest and useful to some portion of the FOSS4G audience? Is it about a common problem or situation that others are likely to have encountered?

Originality — Is this a cutting-edge development? An innovative approach? A new application? An unusual use case?

Practicality — Is the project described a reasonable approach? Is it replicable by others?

Reputation — Is the speaker widely known to the community? Do they have a reputation for quality presentations?

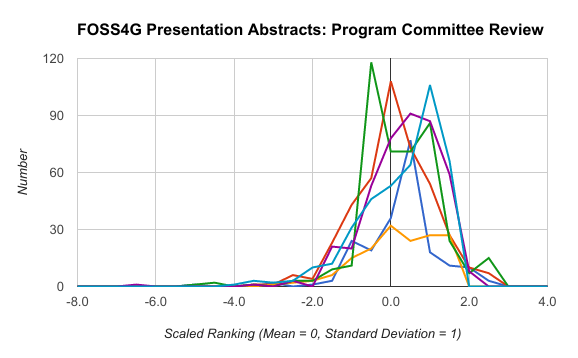

Each reviewer scored the abstracts on these characteristics, as consistently as possible so that their total score could be used to compare one talk with another. But even though there was a common understanding of these characteristics, each reviewer still had their own standards, with some being more generous than others and having higher mean scores. If a Z score is calculated for each reviewer’s set of evaluations, the variation of the distributions is noticeable:

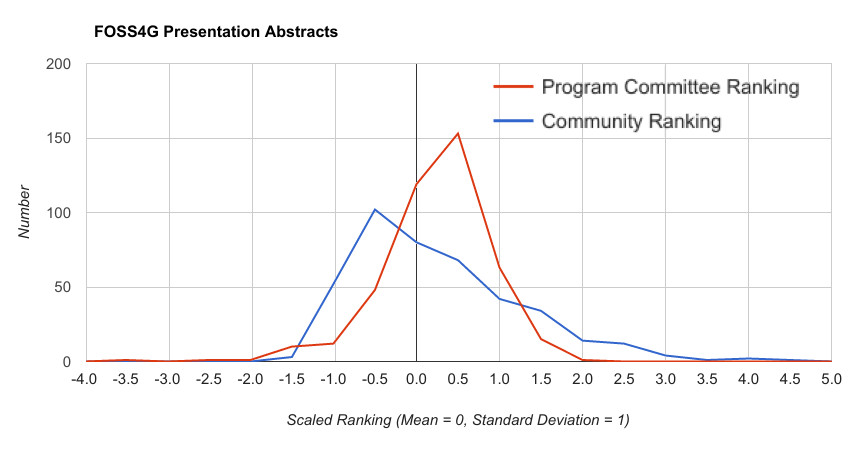

Because the Z scores place the reviewers on a more uniform foundation they can be averaged to produce a useful metric for comparing the presentations. The average Program Committee ranking can then be graphed together with the Community ranking for comparison. The former reveals a more positive skew, probably due to a more inclusive perspective on which topics should be included in the conference.

The Committee agreed to use the average Z score as a ranking metric to choose the middle portion of the presentations, and another 90, with Z scores ranging from 0.1 to 1.3, received the nod. In order to include as many voices as possible, we excluded from this group additional presentations from previously accepted speakers, as well as some from already-represented organizations that we didn’t feel were distinct enough.
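To illustrate why per-reviewer Z scores make the averages comparable, here is a minimal sketch in Python. The reviewer names, abstract IDs, and scores are hypothetical, and the population standard deviation is again an assumption rather than something stated in the post.

```python
from statistics import mean, pstdev

# Hypothetical total scores: reviewer -> {abstract id: score}. Not real data.
raw = {
    "generous": {"A": 28, "B": 24, "C": 26},
    "strict": {"A": 18, "B": 10, "C": 14},
}


def normalize(scores):
    """Convert one reviewer's scores to Z scores within that reviewer's own set."""
    values = list(scores.values())
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    return {abstract: (score - mu) / sigma for abstract, score in scores.items()}


per_reviewer = {name: normalize(scores) for name, scores in raw.items()}

# Average the Z scores across reviewers for each abstract.
abstracts = sorted(raw["generous"])
avg_z = {a: mean(z[a] for z in per_reviewer.values()) for a in abstracts}

# Rank abstracts from highest to lowest average Z score.
ranked = sorted(avg_z, key=avg_z.get, reverse=True)
print(ranked)
```

Although the two invented reviewers use very different raw scales, their Z scores agree, so the average reflects their shared ordering rather than one reviewer's generosity.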

5. Diversifying Content

At this point there were still a number of quality talks that the Program Committee thought were of particular interest, often because they had been flagged by one or two individuals for early acceptance or had been given an overall “accept for oral presentation” status from a majority of reviewers. The Committee also wanted to ensure that some presentations addressed the less represented areas of FOSS4G development and use. At this juncture, we also considered the additional submissions from speakers or organizations that had already had a talk accepted. We agreed that a number of speakers had two strong abstracts worthy of acceptance, and a number of organizations could also contribute more without over-representation. In total, another 46 presentations were accepted.

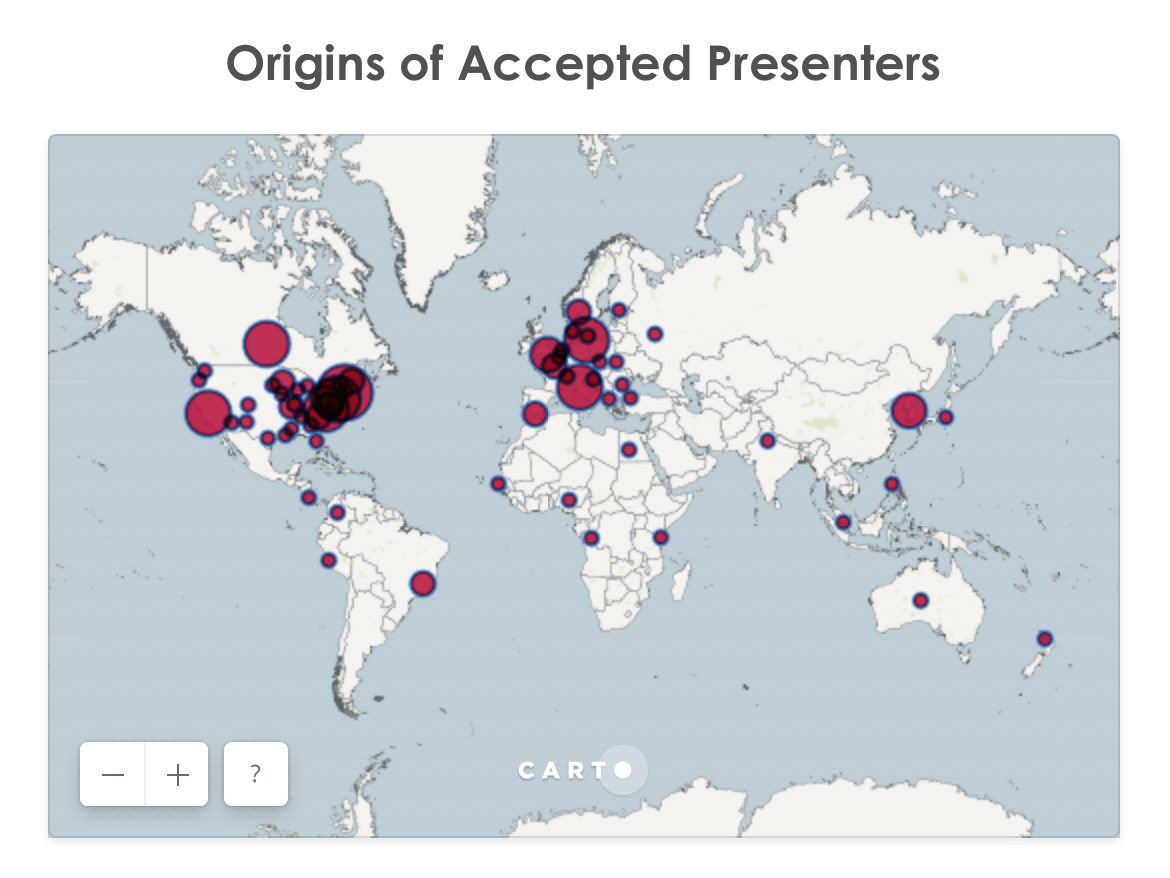

6. Diversifying Speakers

Finally, the Program Committee analyzed the distribution of speakers to ensure there was a diversity of voices. In particular we wanted to make sure that the gender and geographic distribution of accepted presentations were not too far away from the overall ratio of submissions. Gender, as it turned out, was not an issue, with 18% of the accepted abstracts already coming from women, who submitted 14% of the abstracts. Geography was not as simple, however, and we took another look at abstracts from underrepresented regions to see if we missed submissions of interest. This allowed us to increase the accepted presentations to the vicinity of the submitted fraction in every region:

| Region | % Accepted | % Submitted |
| --- | --- | --- |
| South Asia | 0.4% | 0.2% |
| Middle East and North Africa | 0.8% | 1.2% |
| Sub-Saharan Africa | 1.6% | 1.2% |
| Australia | 1.6% | 1.4% |
| Latin America | 2.8% | 3.1% |
| East Asia | 5.2% | 5.8% |
| Europe | 25.7% | 28.7% |
| North America | 61.8% | 58.3% |

We also checked up on conference sponsors to see if they had at least one accepted presentation, which they all already did. In this final round another 25 presentations were accepted.

The final result, 251 out of 424 abstracts accepted for oral presentations, represents a 59% acceptance rate.

Posters

Abstracts that didn’t make the above cut were then considered for posters, in particular if their topic would lend itself well to a printed presentation. The Academic Committee chose 15 posters, and the Program Committee added another 46. Three presenters who were accepted for academic posters but were also selected for oral presentations by the Program Committee opted for the latter, resulting in 58 posters, or 14% of the total.

Waitlist

A number of the remaining abstracts were put on a waitlist for possible later acceptance as we refine the number of presentations we can accommodate and the organization of the topic sessions, and to allow for some inevitable attrition within the accepted papers. There are 41 submissions in this category, or 10%.

Rejections

Relatively few abstracts were rejected outright. Of these, several were duplicate submissions; a few were uniformly rejected by the Program Committee or the Academic Committee based on scoring criteria; and the remainder were submissions from one speaker or organization that had another talk accepted and weren’t distinctive. There were 73 submissions that were rejected (17%), and one submission that was withdrawn early on in the process.

In Conclusion

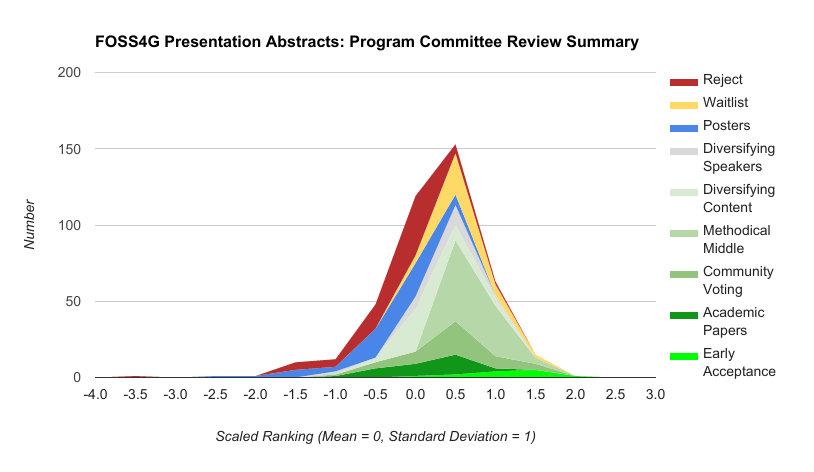

The overall process can be visualized in this histogram of the number of presentations of each type according to their scaled ranking (Z score):

As we hope you will agree, the Program Committee took great care to use an even-handed approach that fairly represented the many technologies and people that make up the FOSS4G community. With such a wealth of quality submissions and a limited amount of time and space to present them all, accepting only a fraction was a necessary but difficult task. It was also one that we found to be both interesting and rewarding, and we are very proud of the result. We greatly appreciate the many people who took the time to submit their abstracts.

We invite everyone to Boston in August for an awesome FOSS4G! You can learn more about the accepted presentations here.

(NB: Since finalizing these results, a few of the accepted presentations were withdrawn due to authors not being able to attend, and a few have changed categories, e.g. from oral presentation to poster. Additional changes will undoubtedly be forthcoming. Therefore, the final numbers in the program will differ somewhat from the snapshot presented above.)

-

FOSS4G Academic Program

Written by: Charlie Schweik & Mohammed Zia · Posted on: May 08, 2017

FOSS4G Academic Track and Review Process

As the world changes, especially in science and technology, we have to listen to and involve as many communities as possible to create geospatial solutions that work for everyone. The world's problems have evolved, and so should our approach.

The open-source community urgently needs to bring together scientists, researchers, developers, end users, and nearly every tier of the geospatial field to confront the current geo-challenges facing our planet and humanity. The FOSS4G 2017 Conference will be an important bridge between academia and industry that leads to new ideas and innovations.

To highlight the top quality academic work that is motivated by and is carried out with FOSS4G data and software, the Conference solicited academic papers in association with oral presentations. The FOSS4G 2017 Academic Committee consisted of 21 individuals having a wide range of expertise in the development and application of FOSS4G technologies, and with academic backgrounds that generally included refereeing papers submitted to journals. They were charged with reviewing the academic abstracts and selecting the best of them for further evaluation of the written article. These papers will be published in the Conference Proceedings, but the best of the papers will also be promoted for consideration for publication in an internationally known GIS journal (yet to be confirmed).

There were 49 academic abstracts amongst the 416 all-conference submissions, and after double-blind review the Academic Committee selected 30 for acceptance as papers. Another 14 were considered worthy for inclusion as posters (which will also be published in the Proceedings). The remaining abstracts were rejected for not meeting the Committee’s agreed upon standards (one was a duplicate).

The FOSS4G 2017 Academic Committee developed a set of criteria for assessing the academic abstracts, and three committee members were assigned to review each one based on their areas of expertise, with no identifying information included.

The reviewers evaluated their assigned abstracts along five components from 1 (worst) to 5 (best):

- Originality

- Technical Quality

- Relevance to FOSS

- Relevance to Geography

- Academic Interest

The reviews for each of these components were averaged together for each abstract as a tertiary guide for review of abstracts at the margins. The resulting abstract components were also averaged together with a uniform weight to provide a single Component Composite, as a secondary guide.
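A minimal sketch of this averaging in Python follows. The three sets of scores are hypothetical, and the field names are invented for the example; only the five components and the uniform weighting come from the description above.

```python
from statistics import mean

COMPONENTS = [
    "originality",
    "technical_quality",
    "relevance_foss",
    "relevance_geography",
    "academic_interest",
]

# Hypothetical scores (1 = worst, 5 = best) from three reviewers for one abstract.
reviews = [
    {"originality": 4, "technical_quality": 3, "relevance_foss": 5,
     "relevance_geography": 4, "academic_interest": 4},
    {"originality": 5, "technical_quality": 4, "relevance_foss": 5,
     "relevance_geography": 3, "academic_interest": 4},
    {"originality": 3, "technical_quality": 2, "relevance_foss": 5,
     "relevance_geography": 5, "academic_interest": 4},
]

# Average each component across the reviewers.
component_avg = {c: mean([r[c] for r in reviews]) for c in COMPONENTS}

# Uniformly weighted mean of the component averages: the Component Composite.
composite = mean(list(component_avg.values()))

print(component_avg)
print(composite)
```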

The reviewers also provided a summary Final Recommendation, which was averaged together for each abstract using a linear numeric scale:

- Strong Accept (5)

- Borderline Accept (4)

- Accept as Poster (3)

- Borderline Reject (2)

- Strong Reject (1)

As might be expected, there is a high correlation between the Final Recommendation and the Component Composite, 0.79.

In the Acceptance List, the abstracts were sorted by their Final Recommendation Average and converted back to the text recommendations above; + or – were added to each if they were an integer ± 1/3 (indicating non-consensus), with the following results:

| Recommendation | Number | Decision |
| --- | --- | --- |
| Strong Accept | 5 | Paper |
| Strong Accept - | 10 | Paper |
| Borderline Accept + | 5 | Paper |
| Borderline Accept | 7 | Paper |
| Borderline Accept - | 7 | 3 Paper, 4 Poster |
| Accept as Poster + | 8 | Poster |
| Accept as Poster | 1 | Poster |
| Accept as Poster - | 1 | Reject |
| Borderline Reject + | 2 | 1 Poster, 1 Reject |
| Borderline Reject | 1 | Reject |
| Strong Reject | 2 | Reject |

There were 27 abstracts that were “Borderline Accept” or better, meaning a consensus on acceptance. The Co-Chairs also looked at the next level of 7 that were “Borderline Accept –”, and using the component evaluations and reviewer comments noted a distinction that led to three more being accepted for paper submission, for 30 all together.

The next group of abstracts were considered for posters in the same way, with 9 that were “Accept as Poster” or better. Consideration of the components and reviewer comments led to the acceptance of one “Borderline Reject +” as a poster and the rejection of one “Accept as Poster –”, along with the remaining four abstracts, for five rejections in total.
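The labelling rule described above, in which three reviewers' recommendations are averaged and a + or – is appended when the average sits one third away from an integer, can be sketched as follows. The function name and the example scores are hypothetical, and the sketch assumes exactly three reviewers per abstract, as stated earlier in this post.

```python
from fractions import Fraction

LABELS = {
    5: "Strong Accept",
    4: "Borderline Accept",
    3: "Accept as Poster",
    2: "Borderline Reject",
    1: "Strong Reject",
}


def recommendation_label(scores):
    """Map three reviewers' 1-5 recommendations to a text label with +/-."""
    avg = Fraction(sum(scores), len(scores))  # exact arithmetic avoids float noise
    nearest = round(avg)                      # nearest whole recommendation
    if avg > nearest:
        suffix = " +"   # integer + 1/3: one reviewer was more positive
    elif avg < nearest:
        suffix = " -"   # integer - 1/3: one reviewer was more negative
    else:
        suffix = ""     # exact integer average
    return LABELS[nearest] + suffix


print(recommendation_label([5, 5, 4]))
print(recommendation_label([4, 4, 5]))
print(recommendation_label([3, 3, 3]))
```

Using exact fractions keeps an average such as 14/3 from being misclassified by floating-point rounding.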

The Academic submissions were also reviewed by the Program Committee and were also voted on by the Community, sometimes with different results reflecting the perspectives and interests of these more diverse groups. When a paper was accepted for a regular oral presentation, the academic submitters were notified of this and were provided with the opportunity to withdraw from the Academic Program with its requirement for an academic paper or poster.

In Conclusion

We deeply appreciate all those who took the time to submit an abstract for consideration by the Academic Committee, and all of the reviewers for their time and effort in arranging and undertaking this review. Of course, no process is free from false negatives, and the process we describe above is no exception. We understand that a few abstract submitters will be upset with their result, but we hope the above explains that we did our best to run a selection process that was unbiased, fair, used peer-review expertise, and was systematic in its selection. This was no easy task on our end, and we do believe that the FOSS4G community will support the decisions at this stage, as well as in later stages when we review the submitted, accepted papers in June.

Contact information

Please feel free to get back to us for any kind of assistance. We are always at your disposal.

Prof. Dr. Charlie M. Schweik, Chair of FOSS4G Boston 2017 Academic Committee. Email: cschweik@pubpol.umass.edu

Mohammed Zia, Co-Chair of FOSS4G Boston 2017 Academic Committee. Email: mohammed.zia33@gmail.com

Thanks again, you all, for making FOSS4G 2017 a reality. We hope to see you in Boston, U.S.A. in August! (Register now)

-

FOSS4G Abstract Review

Written by: Michael Terner · Posted on: April 10, 2017

An embarrassment of riches, and difficult cuts

Full disclosure: I write this blog not just as the FOSS4G Boston 2017 Conference Chairman and a member of our program committee but also as a member of two previous FOSS4G North America program committees and as someone who has had an abstract rejected from a FOSS4G event (and several others accepted). I also write this from my own perspective and I do not speak on behalf of the entire program committee.

There’s an old saying that goes “be careful what you wish for,” and I think our Program Committee is feeling that strongly. On the Friday four days prior to the Call for Presentations deadline, our committee knew that 90 abstracts had been submitted, and we wished mightily for many more (knowing we had room for over 200 talks). Well, our wish came true, and over those last four days we received 336 more abstracts for a total of 416 (and we received several requests to consider abstracts from people who missed the deadline). This was great news, but the “be careful what you wish for” part is being felt in two large ways:

- We have a lot more work to do. Spending 5 minutes per abstract 416 times adds up to over 34 hours for each reviewer. And, reading and scoring is just the start of the selection process.

- After reading just the first several dozen it was clear that the general quality of abstracts was very high. The number of rooms we have and the general program that has been laid out tell us that somewhere between 175 and 200 abstracts will need to be rejected.

As such, we anticipate that the decision making process is going to be arduous, complicated and emotionally difficult knowing that many papers won’t be accepted. We also know that a lot of work went into creating abstracts and that in some cases having an abstract accepted impacts one’s ability to attend the conference. Given these realities and in the spirit of free and open communication I thought it might be useful to share some of the things the program committee thought about in preparing our Call for Presentations and also how we are entering the deliberation phase of the process.

First, and most importantly, the Boston team has a vision for the kind of program that we believe will underpin a great event and attract the largest and widest audience to Boston in August. We also want an event that will help to strengthen the OSGeo community. We laid that vision out in our Call for Presentations (which has now been taken offline, but can be found here), and some of that outlook remains on the Program Page of the website.

The basics of what we are looking for:

- A comprehensive program that is attractive to developers and users of Open Source. A program with content for both advanced, long-time users as well as novices and those just “checking out” FOSS4G software.

- Content that represents the wide variety of uses of FOSS4G software from government to private industry; from transportation to economic development to emergency response.

- A diversity of voices, from experienced FOSS4G presenters to those new to the community, and including a cross section of gender, geography, and ethnicity.

- Representation from the emerging business ecosystem that surrounds the FOSS4G community. How are people creating businesses that employ or support FOSS4G?

Beyond finding content to help us realize our vision for the conference there are other important aspects that need to be considered in making the individual acceptance decisions.

- Quality matters. The writing and descriptions in the abstracts are extremely important in helping us imagine what the presentation will be like.

- Managing fairness of quantity matters. A large number of individuals and organizations submitted more than one abstract (over 60 people submitted more than one abstract, and 16 organizations submitted four or more). While there will undoubtedly be some individuals who have more than one abstract accepted, we are mindful that it is challenging to reject someone’s single entry while accepting multiple entries from others.

- Respecting community voting matters. We have asked the OSGeo Community to vote for what it wants to see, and we will respect that: approximately 20% of the program will be chosen directly from the top community voting results. For all other decisions, the community vote will be considered as one of several important variables. That said, we are also mindful that the community vote is not necessarily a representative sample of those who will attend the conference.

In short, we have a fantastic group of Program Committee volunteers who are working extremely hard on this process and recognize the gravity of the decisions we are making. We are all human and fallible, and we will do the best we possibly can. It is also fair to recognize that it will be easy to second-guess our decisions and that we will inevitably make some mistakes. We ask that this community be empathetic and patient with us and the task ahead.

Here is what comes next:

- Our committee has committed to trying to complete all first pass reviews of all 416 abstracts by Monday, April 10th (i.e., the same day as community voting closes).

- Our committee is working to make some early acceptance announcements, potentially before community voting closes, as there is already some strong agreement on several abstracts. We believe this is helpful as it allows the authors of accepted abstracts to begin their next level of planning to attend. At the same time, it helps others get a better sense of the emerging program and gives them more information on which to base their own decisions about attending, and potentially to make travel arrangements and register earlier. Once the community voting is closed, we will try to make those early announcements quickly, and no later than Friday, April 14.

- We will then continue to make incremental acceptance announcements on a regular basis to continue revealing the program as early as we can.

- The last round of final announcements will be made on Monday, May 1.

Thanks to the entire FOSS4G community for your support.